You don’t have to tear down your monolith to modernize it. You can evolve it into a mesh of microservices using cloud-native technologies.

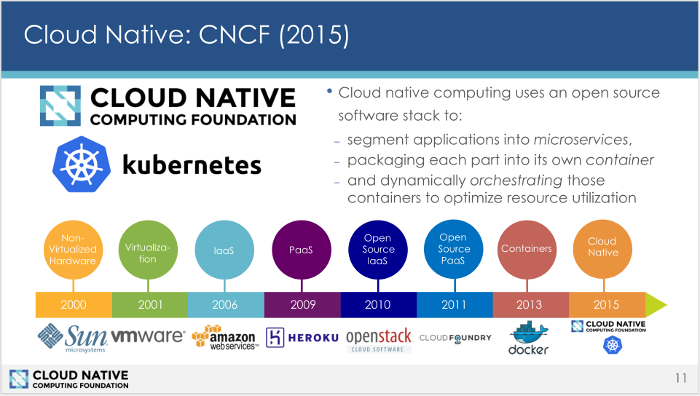

Fast forward to 2006. Amazon popularized the concept of Infrastructure as a Service (IaaS) with Amazon Web Services and its Elastic Compute Cloud (EC2). You no longer had to buy your own hardware. You didn’t even have to worry about running and managing virtual machines that run your applications. You were literally renting the computing environment and underlying infrastructure needed to run your services. You paid by the hour, like renting a conference room. This allowed companies to optimize resources to reduce costs and buy only as much computing power as they needed. It was revolutionary and led to an astounding decline in the cost of computing.

Three years after that, Heroku came up with the idea of Platform as a Service (PaaS). PaaS operated a layer above EC2 by removing administration of the virtual operating system. Heroku magically simplified deploying a new version of your application; now you only needed to type git push heroku. Some of the best-known web companies originated on Heroku.

These advances made deploying applications at any scale—large or small—much easier and more affordable. They led to the creation of many new businesses and powered a radical shift in how companies treated infrastructure, moving it from a capital expense to a variable operating expense.

As good as it all sounds, there was one big problem. All these providers were proprietary companies. It was vendor lock-in. Moving applications between the various environments was difficult. And mixing and matching on-premises and cloud-based applications was nearly impossible.

That’s when open source stepped in. It was Linux all over again, except the software was Docker and Kubernetes, the Linux of the cloud.

Open source to the rescue

Emerging in 2013, Docker popularized the concept of containers. The name “containers” echoes the global revolution when shipping companies replaced ad hoc freight loading with large metal boxes that fit on ships, trucks, and rail cars. The payload didn’t matter. The box was the standard.

Similar to shipping containers, Docker containers create a basic computing wrapper that can run on any infrastructure. Containers took the world by storm. Today, nearly all enterprises are planning future applications on top of container infrastructures, even if they are running them on their own private hardware. It’s just a better way to manage modern applications, which are often sliced and diced into myriad components (microservices) that need to be easily moved around and connected.

This led to another problem. Managing containers taxed DevOps teams like nothing before. It created a step change in the number of moving parts and the dynamic activities around application deployment. Enter Kubernetes.

Google open-sourced Kubernetes in 2014 and released version 1.0 in 2015. Its design drew on Google’s internal cluster manager, Borg. Google and the Linux Foundation created the Cloud Native Computing Foundation (CNCF) to host Kubernetes (and other cloud-native projects) as an independent project governed by the community around it. Kubernetes quickly became one of the fastest-growing open source projects in history, growing to thousands of contributors across dozens of companies and organizations.

What makes Kubernetes so incredible is its implementation of Google’s own experience with Borg. Nothing beats the scale of Google. Borg launches more than 2 billion containers per week, an average of about 3,300 per second. At its peak, it’s many, many more. Kubernetes was born in a cauldron of fire, battle-tested and ready for massive workloads.

CNCF history (image credit: The Linux Foundation, CC BY 3.0)

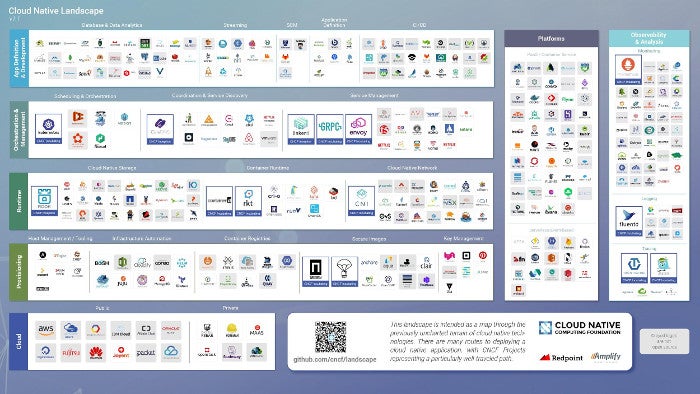

Building on the core ideas of Docker and Kubernetes, CNCF became the home to many other cloud-native projects. Today there are more than 369 big and small projects in the cloud-native space. The critical cloud-native projects hosted by the CNCF include Kubernetes, Prometheus, OpenTracing, Fluentd, Linkerd, gRPC, CoreDNS, rkt, containerd, Container Networking Interface, Envoy, Jaeger, Notary, The Update Framework, Rook, and Vitess.

CNCF landscape (image credit: The Linux Foundation, CC BY 3.0)

However, learning from the mistakes of other open source projects, CNCF has been extra careful to ensure it selects only those technologies that work well together and can meet enterprises’ and startups’ needs. These technologies are enjoying mass adoption.

One of the biggest reasons companies flock to open source technologies is to avoid vendor lock-in and be able to move their containers across clouds and private infrastructure. With open source, you can easily switch vendors, or you can use a mix of vendors. If you have the skills, you can manage your stack yourself.

Slicing off pieces of the monolith

Kubernetes and containers changed more than the ability to manage at scale. They also made it easier to take massive, monolithic applications and slice them into more manageable microservices. Each service can be scaled up and down as needed. Microservices also allow for faster deployments and faster iteration in keeping with modern continuous integration practices. Kubernetes-based orchestration allows you to improve efficiency and resource utilization by dynamically managing and scheduling these microservices. It also adds an extraordinary level of resiliency. You don’t have to worry about individual container failures, because failed containers are restarted or rescheduled automatically, and you can continue to operate as demand goes up and down.
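To make the scale-up-and-down idea concrete, here is a minimal Python sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The article does not name the autoscaler, so treat this as one illustration rather than the only mechanism. The function name, the replica bounds, and the 10 percent tolerance are assumptions chosen for the example, not settings from a real cluster.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,      # assumed lower bound
                     max_replicas: int = 10,     # assumed upper bound
                     tolerance: float = 0.1) -> int:  # assumed tolerance
    """Suggest a replica count from the ratio of observed load to target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # Close enough to the target: keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally, rounding up so capacity is never undershot.
    wanted = math.ceil(current_replicas * ratio)

    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, four replicas averaging 90 percent CPU against a 60 percent target would be scaled to six, while the same four replicas at 30 percent would be scaled down to two.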

Kubernetes has become the leading choice for cloud-native orchestration. It’s also one of the highest velocity development projects in the history of open source and is backed by major players including AWS, Microsoft, Red Hat, SUSE, and many more.

All of this has a direct impact on businesses. According to Puppet’s 2016 State of DevOps report, high-performing cloud-native architectures can have much more frequent deployments, shorter lead times, lower failure rates, and faster recovery from failures. That means features get to market faster, projects can pivot faster, and engineering and developer teams wait around a lot less. Today, if you are building a new application from scratch, the cloud-native application architecture is the right way to do it. Equally important, cloud-native thinking provides a roadmap for how to take existing (brownfield) applications and slowly convert them into more efficient and resilient microservices-based architectures running on containers and Kubernetes. Brownfield, monolithic applications make up the majority of all software products today.

Monoliths are the antithesis of cloud-native. They are expensive, inflexible, tightly coupled, and brittle. The question is: how to break these monoliths into microservices? You might want to rewrite all your large legacy applications. The fact is, most rewrites end in failure. The first system, which you are trying to rewrite, is alive and evolving even as you try to replace it. Sometimes that first system evolves too quickly, and you can never catch up.

You can solve this problem more efficiently. First, stop adding significant new functionality to your existing monolithic applications. Then consider “lift and shift”: take a large application that requires gigabytes of RAM and wrap a container around it. Simple.

Examples of transitioning from monolithic to containers

Ticketmaster is a good example of that approach. It has code running on a PDP-11. To containerize that legacy application, it created a PDP-11 emulator that runs inside a Docker container. Kubernetes has a feature suited to workloads like this, called StatefulSets (formerly known as PetSets), which gives each pod a stable identity and persistent storage across restarts.

Ticketmaster had a unique problem: Whenever it put tickets up for sale, it was essentially launching a distributed denial of service (DDoS) attack against itself because of all the people coming in. The company needed a set of frontend servers that could scale up and handle that demand. Rather than trying to build that into its legacy application, it deployed a new set of microservice containers in front of the legacy app, minimizing ongoing sprawl in its legacy architecture.

As you are trying to move your workload from legacy applications to containers, you may also want to move some functionality from the application into microservices or use microservices to add new functionality, rather than add to the old codebase. For example, if you want to add OAuth functionality, there may be a simple Node.js application you can put in front of your legacy app. If you have a highly performance-sensitive task, you can write it in Golang and set it up as an API-driven service residing in front of your legacy monolith. You will still get API calls back to your existing legacy monolith.

These new functionalities can be written in more modern languages by different teams that can work with their own set of libraries and dependencies and start splitting up that monolith.

KeyBanc in North Carolina is a great example of this approach. It deployed Node.js application servers in front of its legacy Java application to handle mobile clients. This was simpler and more efficient than adding to legacy code and helped future-proof its infrastructure.
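The pattern in this section, new services sitting in front of the monolith while everything else still reaches the legacy app, comes down to a routing decision. The Python sketch below shows only that decision, not a full proxy. The hostnames, ports, path prefixes, and the `pick_backend` helper are all hypothetical, invented for illustration; a real deployment would more likely express the same rules in an ingress controller or API gateway.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str   # URL path prefix owned by a new microservice
    backend: str  # base URL of the service that handles that prefix


# Hypothetical addresses: anything not claimed by a route goes to the monolith.
LEGACY_BACKEND = "http://legacy-monolith.internal:8080"

ROUTES = (
    Route("/oauth", "http://auth-service.internal:3000"),
    Route("/mobile", "http://mobile-api.internal:3000"),
)


def pick_backend(path: str, routes=ROUTES, default=LEGACY_BACKEND) -> str:
    """Return the backend for `path`; the longest matching prefix wins."""
    best = None
    for route in routes:
        # Match the prefix exactly or on a path-segment boundary,
        # so "/oauth" does not accidentally capture "/oauthx".
        boundary = route.prefix.rstrip("/") + "/"
        matches = path == route.prefix or path.startswith(boundary)
        if matches and (best is None or len(route.prefix) > len(best.prefix)):
            best = route
    return best.backend if best else default
```

Moving another piece of functionality out of the monolith then means adding one more `Route` entry; paths that no route claims keep flowing to the legacy application unchanged.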

True cloud-native is a constellation of complementary projects

If you are moving into cloud-native technology, you should consider a constellation of complementary projects to deliver core functionality. For example, among the biggest priorities in a cloud-native environment are monitoring, tracing, and logging. You can go with Prometheus, OpenTracing, and Fluentd. Linkerd is a service mesh that supports more complicated versions of routing. gRPC is an extremely high-performance API system that can replace JSON responses for applications that need higher throughput. CoreDNS is a service-discovery platform. These are all part of CNCF today, and we expect to add more projects to our set of complementary solutions.
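As a small illustration of what monitoring with Prometheus involves, the sketch below renders a counter in the plain-text exposition format that Prometheus scrapes over HTTP. It is hand-rolled to stay self-contained; in practice you would normally use an official Prometheus client library instead. The `render_counter` helper, the metric name, and the label values are hypothetical.

```python
def _escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return (value.replace("\\", "\\\\")
                 .replace('"', '\\"')
                 .replace("\n", "\\n"))


def render_counter(name: str, help_text: str, samples: dict) -> str:
    """Render one counter metric as Prometheus text exposition lines.

    `samples` maps a tuple of (label_name, label_value) pairs to a number.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        if labels:
            pairs = ",".join(
                f'{key}="{_escape_label_value(val)}"' for key, val in labels
            )
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            # No labels: emit the bare metric name.
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_counter(
        "http_requests_total",
        "Total HTTP requests.",
        {(("service", "oauth-proxy"), ("code", "200")): 1027},
    ), end="")
```

Each microservice exposing its own metrics this way is what lets one monitoring system watch both the new services and the containers wrapped around the legacy application.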

Greenfield, brownfield, any field can be cloud-native

When you are migrating legacy monoliths to cloud-native microservices, you don’t need to go greenfield and rewrite everything. CNCF technologies like Kubernetes love brownfield applications. There is an evolutionary path that almost every enterprise and company out there should be on. You can stand up a brand-new application in Kubernetes or steadily evolve your monolith into a beautiful mesh of cloud-native microservices that will serve you for years to come.

Learn more from members of the cloud-native community presenting at KubeCon + CloudNativeCon EU, May 2-4, 2018, in Copenhagen. See the session schedule for details.