AWS released A1 instance types at re:Invent 2018. Honestly, I didn’t think much of the announcement at first. I kept seeing ads for them when logging into the AWS console, and folks seemed excited about them, so I started digging into what the A1 instance type was all about. It didn’t take long to realize why it is a big deal. A1 instances mean more server choices. More choices mean more competition, which is always better for consumers: faster, less expensive, and even better servers for us.

More Competition is Good

The big deal about A1 instances is that they use a CPU built by AWS itself. The CPU has a cool name: the Graviton Processor.

Imagine you are AWS and you have to buy the hardware and CPUs that power the AWS data centers. You currently have two main choices: Intel and AMD. With the A1 instance type, AWS has added itself as an option, so now there are three choices: Intel, AMD, and AWS.

This move reminds me of AWS Certificate Manager (ACM). In the certificate industry, companies charge hundreds to thousands of dollars a year for an SSL cert with a good reputation. We pay because there are no better choices: these vendors have been in the industry for years, built up their status, and we go with a name that everyone knows. Then along comes AWS and decides to provide certs for free. Boom! It’s an added choice. On top of being free, the certs are easy to set up and ready in minutes. Other providers now also have to make things easier and lower their prices. Having more choices is simply better for customers.

The same thing is happening in the server CPU industry. Putting another CPU provider on the table will foster innovation and lead to cost savings. AWS is already quoting a 42% savings number for A1 instances running its Graviton processors.

Cost Comparison

When I heard that I could save up to 42% just by switching to A1 instances, I looked it up myself to confirm. For quick pricing comparisons, the ec2instances.info site is helpful.

Bad Comparison

I hastily compared the A1 instances to the T3 instance types, matching them on memory. Here’s a table:

| A1 | T3 | Memory | A1 Price | T3 Price | Difference |
|---|---|---|---|---|---|
| a1.large | t3.medium | 4GB | $37.23/mo | $30.37/mo | -$6.86/mo (-23%) |
| a1.xlarge | t3.large | 8GB | $74.46/mo | $60.74/mo | -$13.72/mo (-23%) |
| a1.2xlarge | t3.xlarge | 16GB | $148.92/mo | $121.47/mo | -$27.45/mo (-23%) |
| a1.4xlarge | t3.2xlarge | 32GB | $297.84/mo | $242.94/mo | -$54.90/mo (-23%) |

NOTE: These prices were taken on 12/16/2018. Please check the AWS pricing page for the most up-to-date pricing as prices will change.
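The Difference column is the T3 price minus the A1 price, with the percentage taken relative to the T3 price; a negative number means A1 costs more. Here is a minimal Python sketch of that arithmetic, assuming that is how the percentages were computed (it does reproduce the -23% figures), with the prices copied from the table:

```python
from typing import Tuple


def a1_savings(a1_monthly: float, other_monthly: float) -> Tuple[float, int]:
    """Return (dollars saved per month, percent saved) when moving to A1.

    Negative values mean the A1 instance costs more. The percentage is
    relative to the other instance's price.
    """
    dollars = round(other_monthly - a1_monthly, 2)
    percent = round(100 * (other_monthly - a1_monthly) / other_monthly)
    return dollars, percent


# Monthly on-demand prices from the table above (taken 12/16/2018).
PAIRS = [
    ("a1.large", 37.23, "t3.medium", 30.37),
    ("a1.xlarge", 74.46, "t3.large", 60.74),
    ("a1.2xlarge", 148.92, "t3.xlarge", 121.47),
    ("a1.4xlarge", 297.84, "t3.2xlarge", 242.94),
]

for a1, a1_price, t3, t3_price in PAIRS:
    dollars, percent = a1_savings(a1_price, t3_price)
    print(f"{a1} vs {t3}: {dollars:+.2f}/mo ({percent:+d}%)")
```

Every row comes out at about -23%, which is what first made me suspicious of the comparison.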

Bummer… weren’t these servers supposed to save money?

I was confused by the numbers. What was I missing? It did not take long to realize that I was missing something obvious: in my quick assessment, I was only looking at RAM. I was comparing apples to oranges.

Here are tables with the CPU included for a more careful examination:

| Instance Type | Memory | CPU | On-Demand Monthly Cost |
|---|---|---|---|
| a1.large | 4GB | 2 vCPU | $37.23/mo |
| a1.xlarge | 8GB | 4 vCPU | $74.46/mo |
| a1.2xlarge | 16GB | 8 vCPU | $148.92/mo |
| a1.4xlarge | 32GB | 16 vCPU | $297.84/mo |

| Instance Type | Memory | CPU | On-Demand Monthly Cost |
|---|---|---|---|
| t3.medium | 4GB | 2 vCPU, 4h 48m/day burst | $30.37/mo |
| t3.large | 8GB | 2 vCPU, 7h 12m/day burst | $60.74/mo |
| t3.xlarge | 16GB | 4 vCPU, 9h 36m/day burst | $121.47/mo |
| t3.2xlarge | 32GB | 8 vCPU, 9h 36m/day burst | $242.94/mo |

You see, T3 instances do not give you the same CPU. Their CPU is burstable, and some sizes even have fewer vCPUs than the matching A1 size, so it’s an unfair comparison. T3 instances are great and inexpensive, and I use them for development all the time. In production and at scale, though, I typically use a variety of other instance types, and T3 usually does not make that list.
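The burst times in the T3 table line up with each size's baseline CPU percentage applied to a 24-hour day: a T3 earns CPU credits at its baseline rate, and running every vCPU flat out spends them, so the credits earned in a day cover baseline% of 24 hours at full speed. A small Python sketch of that arithmetic, assuming the per-vCPU baselines AWS documented for T3 (20% for t3.medium, 30% for t3.large, 40% for t3.xlarge and t3.2xlarge) and ignoring launch credits and unlimited mode:

```python
# Assumed T3 baseline performance per vCPU, in percent (check the current
# AWS burstable-performance documentation before relying on these).
T3_BASELINE_PCT = {
    "t3.medium": 20,
    "t3.large": 30,
    "t3.xlarge": 40,
    "t3.2xlarge": 40,
}


def full_speed_per_day(baseline_pct: int) -> str:
    """Approximate daily time all vCPUs can run at 100% on earned credits alone.

    Integer math keeps it exact: 20% of 1,440 minutes is 288 minutes.
    """
    minutes = baseline_pct * 24 * 60 // 100
    hours, mins = divmod(minutes, 60)
    return f"{hours}h {mins}m"


for name, pct in T3_BASELINE_PCT.items():
    print(name, full_speed_per_day(pct))
```

Outside those windows, a T3 settles back to its baseline, which is why it isn't a like-for-like match for an A1 that runs its vCPUs at full speed all day.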

A Better Comparison

A much better and fairer comparison is against the C5 instance types, which have comparable memory and CPU.

| A1 | C5 | Memory | CPU | A1 Price | C5 Price | Difference |
|---|---|---|---|---|---|---|
| a1.large | c5.large | 4GB | 2 vCPU | $37.23/mo | $62.05/mo | $24.82/mo (40%) |
| a1.xlarge | c5.xlarge | 8GB | 4 vCPU | $74.46/mo | $124.10/mo | $49.64/mo (40%) |
| a1.2xlarge | c5.2xlarge | 16GB | 8 vCPU | $148.92/mo | $248.20/mo | $99.28/mo (40%) |
| a1.4xlarge | c5.4xlarge | 32GB | 16 vCPU | $297.84/mo | $496.40/mo | $198.56/mo (40%) |

There we go! The numbers make much more sense, and a 40% savings is close to the advertised 42%. Saving 40% of your AWS bill simply by switching instance types would be a major win.
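The monthly prices in these tables look like hourly on-demand rates multiplied by 730 hours (8,760 hours in a year divided by 12). Here is a Python sketch under that assumption. The hourly rates below were back-computed from the table (monthly price ÷ 730), not copied from the AWS pricing page:

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12 months

# Hourly on-demand rates back-computed from the monthly prices above (price / 730).
HOURLY = {
    "a1.large": 0.051, "c5.large": 0.085,
    "a1.xlarge": 0.102, "c5.xlarge": 0.17,
    "a1.2xlarge": 0.204, "c5.2xlarge": 0.34,
    "a1.4xlarge": 0.408, "c5.4xlarge": 0.68,
}


def monthly(instance_type: str) -> float:
    """Monthly on-demand cost in dollars, rounded to cents."""
    return round(HOURLY[instance_type] * HOURS_PER_MONTH, 2)


def percent_saved(a1: str, c5: str) -> float:
    """Savings from running `a1` instead of `c5`, as a percent of the C5 price."""
    return round(100 * (HOURLY[c5] - HOURLY[a1]) / HOURLY[c5], 1)


for size in ("large", "xlarge", "2xlarge", "4xlarge"):
    a1, c5 = f"a1.{size}", f"c5.{size}"
    print(f"{a1}: ${monthly(a1):.2f}/mo  {c5}: ${monthly(c5):.2f}/mo  "
          f"saved: {percent_saved(a1, c5)}%")
```

Because the A1 rate is a fixed fraction of the C5 rate at every size, the savings stay at 40% as you scale up.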

Performance Boost

There are actually more benefits to the A1 instances than just price. Here’s what stood out to me. A1 instances are built on the new Nitro System. The Nitro Hypervisor manages CPU and memory much more efficiently, making performance practically indistinguishable from running on bare metal! Benchmarks from Brendan Gregg of Netflix have shown less than a 1% difference. The A1 instance types also pair the Elastic Network Adapter (ENA) with NVMe technology, which makes network and EBS performance even faster: Amazon EC2 Nitro System Based Instances Now Support Faster Amazon EBS-Optimized Instance Performance. Essentially, AWS engineers have tuned and optimized these machines to be blazing fast in the cloud.

The Catch?

My next thought:

Well, there’s probably some catch.

The Graviton Processor is an ARM-based, not x86-based, processor. This means the machine code your software compiles down to is different, so you must recompile your software for the ARM CPU architecture. AWS says it has gone to great lengths to make sure open source software compiles on ARM processors just fine: Introducing Amazon EC2 A1 Instances Powered By New Arm-based AWS Graviton Processors

Most applications that make use of open source software like Apache HTTP Server, Perl, PHP, Ruby, Python, NodeJS, and Java easily run on multiple processor architectures due to the support of Linux based operating systems.
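To make "the machine code is different" concrete: every Linux executable starts with an ELF header that records the CPU architecture it was compiled for, in the e_machine field at byte offset 18. A minimal Python sketch that reads it. The constant values come from the ELF specification (EM_X86_64 = 62, EM_AARCH64 = 183), and the helper names are my own:

```python
import struct

# e_machine values from the ELF specification (a small subset).
EM_NAMES = {3: "x86 (i386)", 40: "ARM (32-bit)", 62: "x86-64", 183: "AArch64 (64-bit ARM)"}


def elf_machine(header: bytes) -> str:
    """Return the target architecture recorded in the first 20 bytes of an ELF file."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # EI_DATA (byte 5) gives the byte order: 1 = little-endian, 2 = big-endian.
    endian = "<" if header[5] == 1 else ">"
    # e_ident is 16 bytes, e_type is 2 bytes, so e_machine starts at offset 18.
    (machine,) = struct.unpack_from(endian + "H", header, 18)
    return EM_NAMES.get(machine, f"unknown ({machine})")


def elf_machine_of_file(path: str) -> str:
    """Read just enough of an executable to report its target architecture."""
    with open(path, "rb") as f:
        return elf_machine(f.read(20))
```

Running `elf_machine_of_file("/bin/ls")` should report x86-64 on a C5 instance and AArch64 on an A1 instance. A binary built for one cannot simply be executed on the other.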

More importantly, AWS has been emphasizing that containerized microservices work well on A1 instances with their ARM-based processors. In theory, this means we just need to rebuild the Docker image on an ARM instance, and we should be good to go. Since the build steps are already codified in the Dockerfile, it should take very little work to realize the 40% savings: rebuild the image and ship the container onto the instance.

The Test

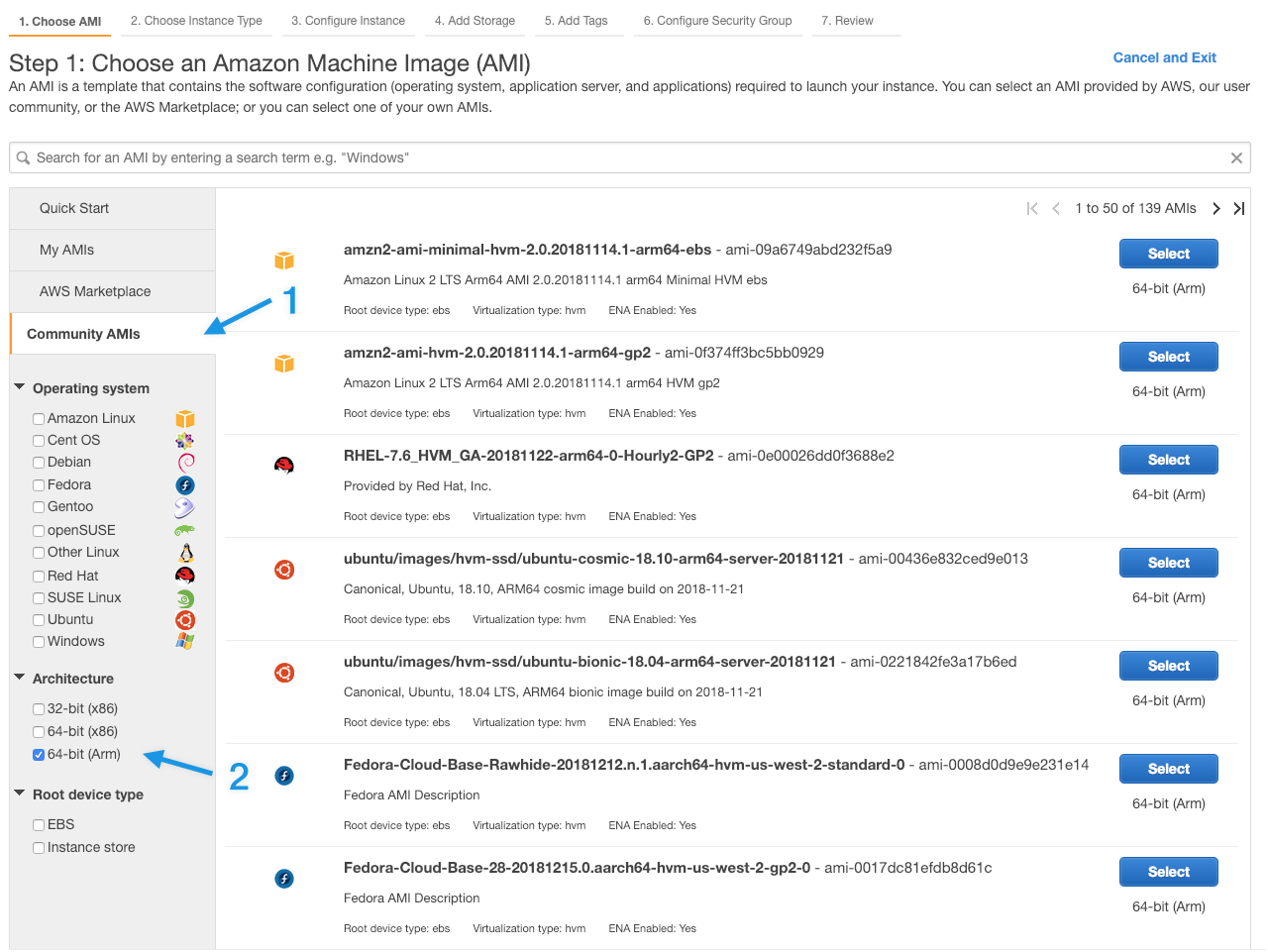

The first step in testing these ARM instances is to find an AMI that has already been built for ARM. A quick way to do this is with Step 1 of the EC2 Instance Launch Wizard:

- Click the Community AMIs menu

- Check the 64-bit (Arm) checkbox

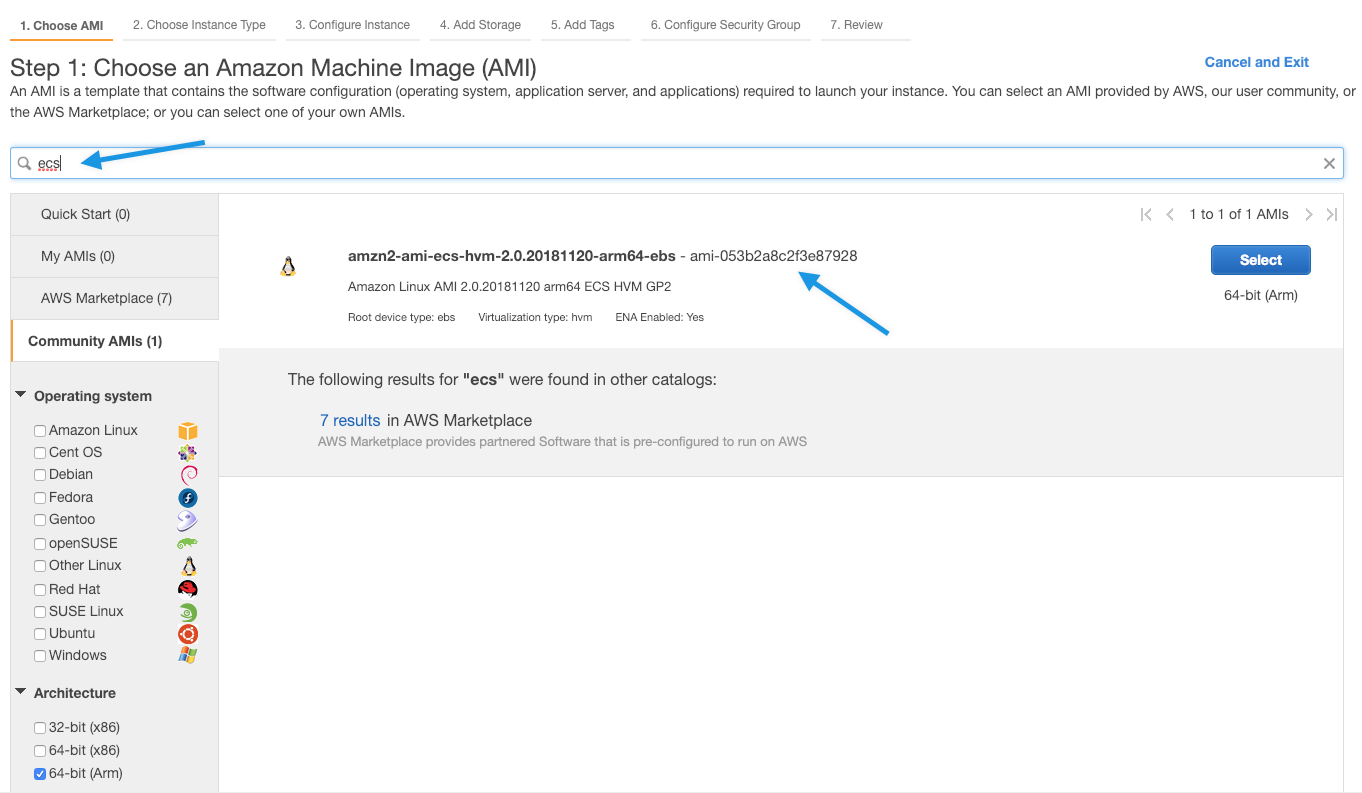

You will see a long list of AMIs with different OSes like Amazon Linux 2, Red Hat, Ubuntu, Fedora, etc. AWS has already done a lot of work for us, and we can launch any of them to test. For my purposes, I am most interested in running Docker containers on the A1 instances, so I searched for ECS and found the ECS AMI.
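The console's Arm filter can also be applied programmatically. Here is a sketch using boto3's EC2 `describe_images` call, assuming boto3 is installed and AWS credentials are configured. The `*ecs*` name pattern, the us-west-2 default, and restricting results to Amazon-owned images are my own choices. The console's Community AMIs list also includes images from other owners.

```python
from typing import Dict, List


def arm_ami_filters(name_pattern: str = "*ecs*") -> List[Dict[str, List[str]]]:
    """DescribeImages filters for available 64-bit Arm AMIs matching a name wildcard."""
    return [
        {"Name": "architecture", "Values": ["arm64"]},
        {"Name": "name", "Values": [name_pattern]},
        {"Name": "state", "Values": ["available"]},
    ]


def find_arm_amis(region: str = "us-west-2", name_pattern: str = "*ecs*") -> List[str]:
    """List Amazon-owned Arm AMIs, newest first, as 'ami-id  name' strings."""
    import boto3  # imported here so the filter helper works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.describe_images(Owners=["amazon"], Filters=arm_ami_filters(name_pattern))
    images = sorted(response["Images"], key=lambda img: img["CreationDate"], reverse=True)
    return [f"{img['ImageId']}  {img.get('Name', '')}" for img in images]
```

For example, `print("\n".join(find_arm_amis()[:5]))` shows the five newest matches. AMI IDs differ per region, so pass the region you plan to launch in.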

The cool thing about using the ECS AMI is that Docker is already installed, so we can quickly rebuild our Docker image and see if it works. I’m testing with a Sinatra app.

First, I tested the current tongueroo/sinatra:latest Docker image on Docker Hub, which was previously built on the x86 architecture. We should expect this not to work, since it’s a different architecture:

```sh
$ ssh ec2-user@<instance-public-dns>
$ docker run --rm -ti tongueroo/sinatra bash
standard_init_linux.go:190: exec user process caused "exec format error"
```

As expected, we get an error. We need to rebuild the Docker image.
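The "exec format error" is the kernel refusing to run an x86-64 binary on an ARM CPU. You can spot the mismatch before running anything by comparing the image's `Architecture` field from `docker image inspect` with the host. Here is a Python sketch, assuming the Docker CLI is on the PATH and the image has already been pulled locally. The architecture mapping covers only the two architectures discussed here, and the helper names are hypothetical:

```python
import json
import platform
import subprocess
from typing import Optional

# platform.machine() / `uname -m` names mapped to Docker's architecture names.
DOCKER_ARCH = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def host_arch(machine: Optional[str] = None) -> str:
    """Docker-style architecture name for this host (or for the given machine string)."""
    m = (machine or platform.machine()).lower()
    return DOCKER_ARCH.get(m, m)


def image_arch(inspect_output: str) -> str:
    """Architecture of the first image in `docker image inspect` JSON output."""
    return json.loads(inspect_output)[0]["Architecture"]


def docker_inspect(image: str) -> str:
    """Run `docker image inspect` and return its JSON output (raises if the image is missing)."""
    result = subprocess.run(["docker", "image", "inspect", image],
                            check=True, capture_output=True, text=True)
    return result.stdout


def can_run(image_architecture: str, machine: Optional[str] = None) -> bool:
    """True if an image built for `image_architecture` matches the host CPU."""
    return image_architecture == host_arch(machine)
```

On the A1 instance, `can_run(image_arch(docker_inspect("tongueroo/sinatra")))` should be False for the x86-built image (which should report amd64) and True once the image is rebuilt locally.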

It Just Works

Let’s rebuild the Docker image with an arm tag. Here are the commands:

```sh
$ sudo yum install -y git
$ git clone https://github.com/tongueroo/sinatra
$ cd sinatra
$ docker build -t tongueroo/sinatra:arm .
$ docker run --rm -ti tongueroo/sinatra:arm bash
root@81537649bd01:/app# ruby hi.rb
[2018-12-16 02:18:29] INFO WEBrick 1.4.2
[2018-12-16 02:18:29] INFO ruby 2.5.3 (2018-10-18) [aarch64-linux]
== Sinatra (v2.0.4) has taken the stage on 4567 for development with backup from WEBrick
[2018-12-16 02:18:29] INFO WEBrick::HTTPServer#start: pid=6 port=4567
```

Success! All we had to do was rebuild the image, and it just worked! Press CTRL-C to stop the server and type exit to leave the container. Then let’s test more thoroughly by curling the Sinatra app.

```sh
$ docker run --rm -d -p 4567:4567 tongueroo/sinatra:arm
76d0b66e2d8995c36d07473da46e4ea15ed0fcda38e95cf83fa85ae3ded5b607
$ curl localhost:4567 ; echo
42
```

The Sinatra app responds successfully with 42, the meaning of life. That’s very little work for a 40% savings on a faster server.
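The curl check is easy to turn into a scripted smoke test, for example as the last step of a deploy. Here is a minimal sketch using only Python's standard library. The URL and the expected body "42" come from this Sinatra example, and `app_responds` is a hypothetical helper name, so adjust all three for your own app:

```python
import urllib.request


def app_responds(url: str, expected: str = "42", timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to `url` returns status 200 and the expected body.

    urlopen raises urllib.error.URLError (HTTPError for 4xx/5xx responses) when
    the app is unreachable or unhealthy, so a caller may want to catch it and retry.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        body = response.read().decode("utf-8").strip()
        return response.status == 200 and body == expected
```

On the instance, `app_responds("http://localhost:4567")` mirrors the curl above. From your workstation, use the instance's public DNS name instead of localhost.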

Automating It with CloudFormation

Here’s a CloudFormation template that automates all of this. It’s written as a lono project, and the full source code is on GitHub: tongueroo/arm-ec2-instance-tutorial. It launches an ARM-based instance and runs the commands we’ve covered. Here is its user-data script: bootstrap.sh. The template also creates a security group with ports 22 and 4567 open for testing. It is pretty easy to fork the project and modify bootstrap.sh to fit your needs.

IMPORTANT: You’ll need to set KeyName in config/params/base/ec2.txt to a key pair that exists in your AWS account and region. Here are the commands:

```sh
git clone https://github.com/tongueroo/arm-ec2-instance-tutorial
cd arm-ec2-instance-tutorial
bundle
lono cfn create ec2
```

Note: The lono project is configured to add a random suffix at the end of the stack name so we can launch a bunch of stacks in rapid-fire fashion with the same command if needed.

If you prefer not to use lono and have the AWS CLI installed, you can also run:

```sh
aws cloudformation create-stack \
  --stack-name=ec2-$(date +%s) \
  --template-body file://raw/ec2.yml \
  --parameters ParameterKey=KeyName,ParameterValue=default
```

Remember to substitute default with your KeyName value. Also note that the date +%s command appends the current Unix timestamp to the stack name, so each stack gets a unique name.
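If you script stack creation from Python instead of the shell, the `ec2-$(date +%s)` trick translates directly. Below is a sketch that also checks CloudFormation's stack-name rules (start with a letter; only letters, digits, and hyphens; at most 128 characters). The function name is hypothetical:

```python
import re
import time
from typing import Optional

# CloudFormation stack names: start with a letter; letters, digits, and hyphens; max 128 chars.
STACK_NAME = re.compile(r"[A-Za-z][A-Za-z0-9-]{0,127}")


def timestamped_stack_name(prefix: str = "ec2", now: Optional[float] = None) -> str:
    """Python equivalent of ec2-$(date +%s): the prefix plus Unix epoch seconds."""
    epoch = int(time.time() if now is None else now)
    name = f"{prefix}-{epoch}"
    if not STACK_NAME.fullmatch(name):
        raise ValueError(f"invalid CloudFormation stack name: {name!r}")
    return name
```

A timestamp is unique only to the second, so two stacks created within the same second would collide. Append a few random characters if you launch faster than that.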

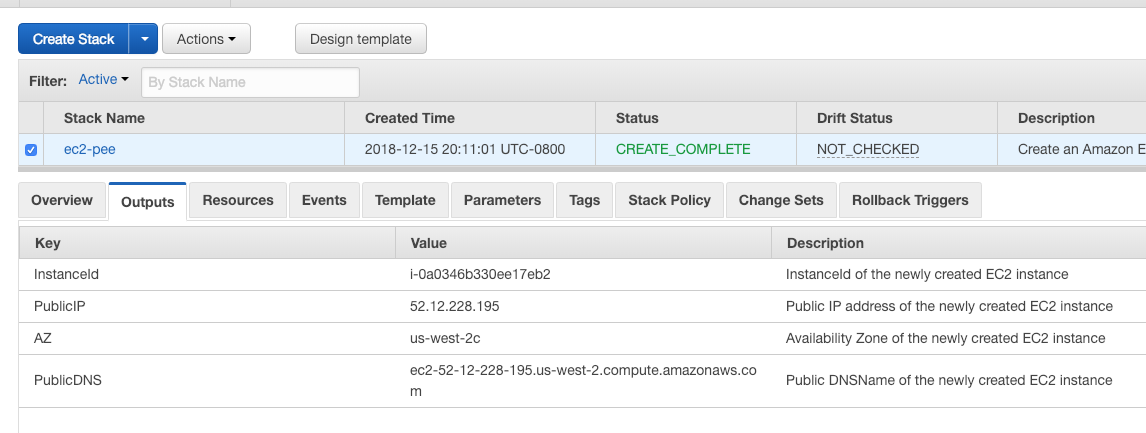

The stack’s CloudFormation outputs contain the server’s public DNS name, which we can grab for testing.

After the stack is created, you’ll have to wait about 2 minutes for the user-data bootstrap script to finish. You can ssh into the instance and check the /var/log/cloud-init-output.log file. Here’s an example of me checking:

```sh
$ ssh ec2-user@<instance-public-dns> # use your DNS name
$ tail /var/log/cloud-init-output.log
Successfully built b9d449d5de82
Successfully tagged tongueroo/sinatra:arm
+ docker run --rm -d -p 4567:4567 tongueroo/sinatra:arm
9248733d8e9511f3e9aae848e70073e41fb9cde3085ce0885337dc248fdd49ec
+ sleep 10
+ curl -s localhost:4567
42+ echo
+ uptime
Cloud-init v. 18.2-72.amzn2.0.6 finished at Sun, 16 Dec 2018 04:13:04 +0000. Datasource DataSourceEc2. Up 101.33 seconds
```
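Instead of eyeballing the tail, a script can watch the log for cloud-init's final "finished at" line. Here is a Python sketch of the parsing step, assuming the message format shown in this log; other cloud-init versions may word it differently. The function names are hypothetical:

```python
import re
from typing import Optional

# Matches cloud-init's final status line, as printed in cloud-init-output.log above.
FINISHED = re.compile(r"Cloud-init v\. \S+ finished at .*?\s+Up\s+(\d+(?:\.\d+)?) seconds")


def cloud_init_uptime(log_text: str) -> Optional[float]:
    """Return the uptime (seconds) reported when cloud-init finished, or None if not yet."""
    matches = FINISHED.findall(log_text)
    return float(matches[-1]) if matches else None


def read_log(path: str = "/var/log/cloud-init-output.log") -> str:
    """Read the cloud-init output log (run this on the instance itself)."""
    with open(path) as f:
        return f.read()
```

Calling `cloud_init_uptime(read_log())` in a loop with a short sleep gives a simple "wait until bootstrap is done" check. A `None` result means the user-data script is still running.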

We can see that the bootstrap script successfully curled localhost:4567 and that cloud-init finished about 101 seconds after boot. You should also be able to curl the app from its public DNS name, since the security group opens up port 4567 for testing:

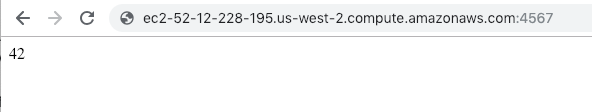

```sh
$ curl ec2-52-12-228-195.us-west-2.compute.amazonaws.com:4567 ; echo
42
```

You can also view it in a web browser.

That’s it! I didn’t think much about A1 instances when they were announced, but after looking into them, they’ve certainly caught my attention. I’m super excited for more instance types to roll out with the Graviton processor on board. It takes very little work to realize a 40% savings, so it’s worth taking a look at the A1 instances and giving them a try. Here is the full source code on GitHub again: tongueroo/arm-ec2-instance-tutorial.